Imagine if you had the power to upload your mind and tweak aspects of yourself in the process. What facets would you like to keep and what would you choose to discard?

I am drawn to the idea of crafting AI agents based on existing people and witnessing their evolution. Spending time interacting with different versions of myself seems like a curious proposition, especially if it includes dialoguing with my ten-year-old, twenty-year-old, or even a hypothetical seventy-year-old self. I imagine I’d learn things about my own personality, about humans in general, about how we change over time, and about the conflicts between my memories and how I’d perceive my past self.

But what could be even more engaging is the opportunity to mold this digital consciousness: to experiment with alternate identities and explore a multitude of "Vanessas" as they engage with the world, with each other, with virtual renditions of the people I hold dear, or with experiences I could never have, like time traveling!

I’m genuinely excited about AI-powered simulations. Still, they lead me to ask: how is this different from what we can already do? The act of storytelling is precisely this: crafting characters imbued with fragments of ourselves. Similarly, when we resonate with a narrative, we project a part of our identity onto someone else's and translate their tale into our own worldview. Humans are constantly changing one another through ideas, through actions, or simply by spending time together.

Reading a personal account by someone who lived centuries ago, particularly through an artful translation, brings the author back to life, even if only in our imagination. Similarly, a photograph helps to rekindle and broaden our memory of a particular moment. AI can amplify this experience, allowing a complex dialogue to happen. Instead of reminiscing over a photograph, we could converse with our past selves, immersing ourselves once more in bygone contexts.

I imagine both beautiful and terrible outcomes from this capability. What if humans develop a whole new set of mental illnesses in which they get stuck in rumination, addicted to thoughts as if to a drug? On the other hand, running models of the past and visualizing how a particular situation could have turned out differently could become a standard way to learn how to do better next time, as if we were training our minds to behave in ways that bring us closer to fulfilling our desires, scary as they might be.

A faithful AI agent would serve as a snapshot of a self. It might not be able to retain many memories of new interactions with the outside world; otherwise, it would eventually evolve into something other than the original: an alternate self. In immersive simulations, however, visitors could have choices: they could opt to engage with a perpetually evolving model, revisit a preserved snapshot of the mind, or use an existing mind to fashion an entirely new character.

With current technology, we can already train (or prompt; ask ChatGPT about the difference) a language model on our writing. We can clone a voice and create variations of it, such as age changes or gender swaps. We can use image generators to create an array of similar characters or accurate depictions of an individual. We're on the brink of an internet populated by our interactive digital avatars, with varying degrees of autonomy and ability. In the future, we may end up training these avatars to work for us or care for us. We’ll probably evolve symbiotic relationships with them, until we can no longer tell ourselves apart from them. We’re slowly becoming cyborgs.

My favorite futuristic ideas about evolving AIs and life are the ones I read in Hans Moravec's book ‘Mind Children’, written in the 80s. When discussing mind uploading, he contrasts two concepts of the self. In body identity, the self is necessarily attached to the physical atoms of a living being. In pattern identity, just as a pentagon is always a pentagon regardless of where it appears, a specific mind would remain the same self no matter in which dimension it manifests, as long as the properties that define that mind carry over into the new version.

How can we define a mind through abstract properties? Thinking of literature, we could argue that the same kind of hero appears in stories throughout the ages, gaining new attributes while keeping the same core properties. I wonder how much of our minds could be passed on through AIs or through whatever new powers lifeforms will develop. Moravec’s speculations fascinate me because they ignite my imagination about a future in which life continues its quest for expansion through the universe, and we, humanity, are an interesting opening chapter in a long history of consciousness.

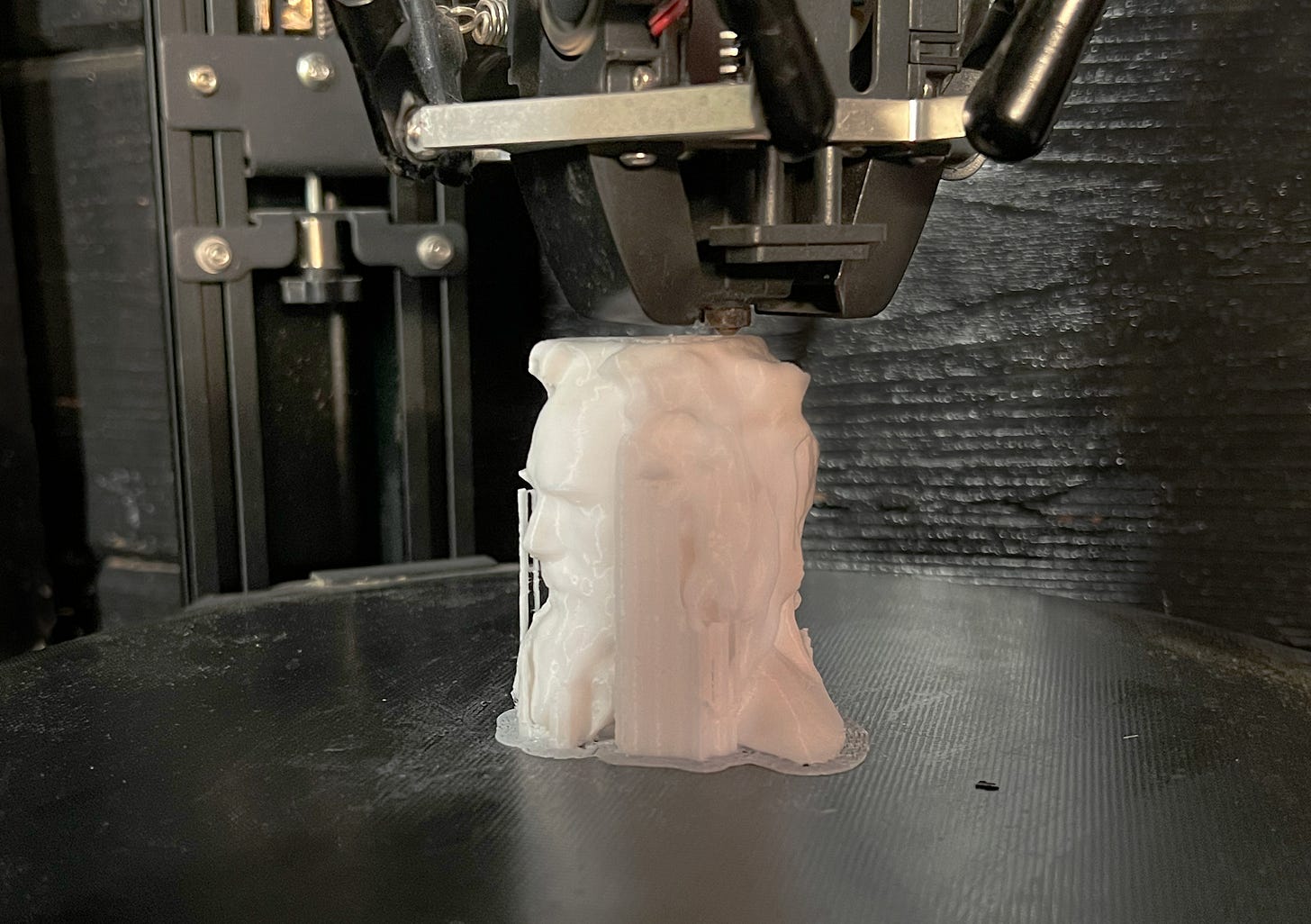

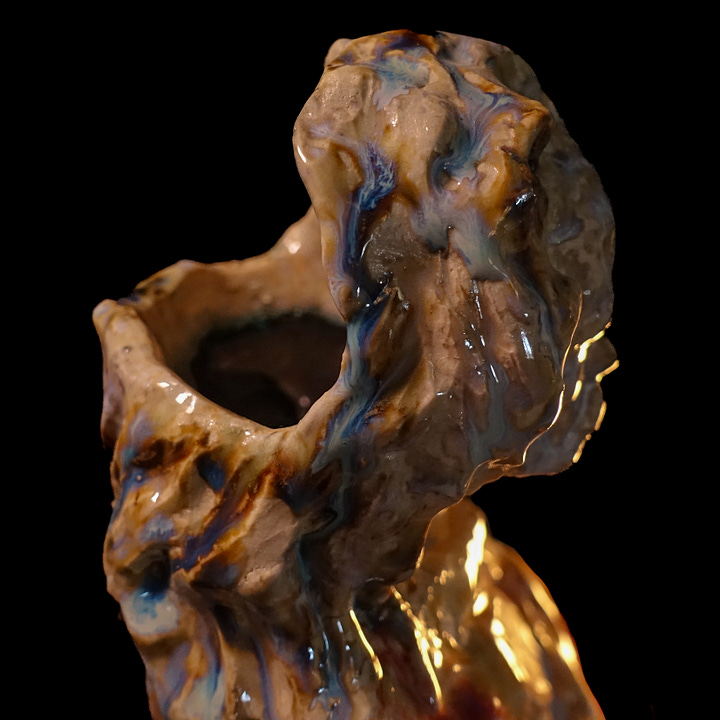

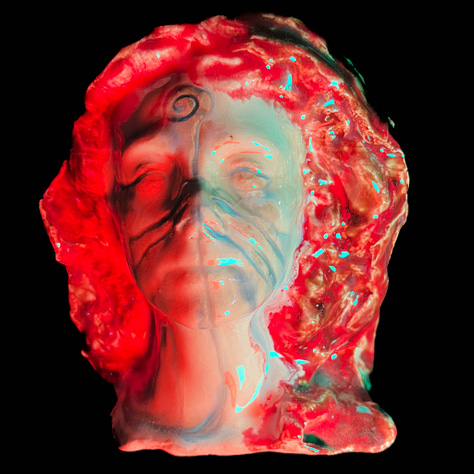

During Mars College 2023, I decided to explore these ideas. Using 3D scanning, I captured the likenesses of various participants in our educational/artistic experiment (we call ourselves "Martians"), including myself. I then 3D-printed these scans, created silicone molds, and manipulated the clay figures, allowing for numerous variations of a person, just like we had collectively done with Shakespeare. For the Mars Finals show, I made a video featuring an interview with Pseudo (our mysterious mathematician) and myself, though it wasn’t us who appeared on screen but animated, 3D-scanned ceramic versions of us. We discussed infinity, surreal numbers, and mind uploading. This video, alongside all the sculptures, was displayed at the Little Martians shrine, an installation inside the main Mars structure. The animation in the background was a collaboration between Xander Steenbrugge and me.

I realized that creating only heads was not enough to play with mind uploading concepts. Watching an animated, bodiless head of myself talk made me a bit uncomfortable. If I can train an AI model of myself, I’d like it to have a body. The sculpture I animated from Pseudo had one, in meditation clothes, and I think the part of the video where he appears is far better than the part where I do. So next year, I’ll make sure to give everyone some kind of body.

In a parallel project, Gene Kogan and Xander Steenbrugge trained image generators on Martian photos, cloned our voices (with varying degrees of success), and created language bots programmed with personality descriptions. Through the Eden.art web interface, people could write questions to these characters, who answered with videos. Gene curated some of the videos and made a short film for the Bombay Beach Biennale Film Festival.

Now, we aim to combine these two methodologies. I envision an interactive 3D-scanned mesh responding in a digital realm, while the physical sculpture, which has an NFC chip linked to a crypto wallet, accumulates the generated video NFTs like cherished memories. I hope to share a prototype from this project next month.

The purpose of this artistic endeavor is to experiment with what's currently achievable. I don’t believe mind uploading has a fixed, clear meaning, and I’m happy to explore its potential. Our fictional, interactive uploaded minds would tell us stories about mind uploading and, like all science fiction, would reflect present-day anxieties and desires about the future.

In the expansive annals of literature, art, and humanity, present-day AI tools are yet another stride in extending our ability to make elements of our consciousness available to posterity. While they may not amount to complete mind uploading or immortality, they are a significant milestone in our pursuit of rich, fulfilling lives and in our yearning to share our wisdom with the wider world.

The last video is a StyleGAN3 experiment trained on the Vanessas.